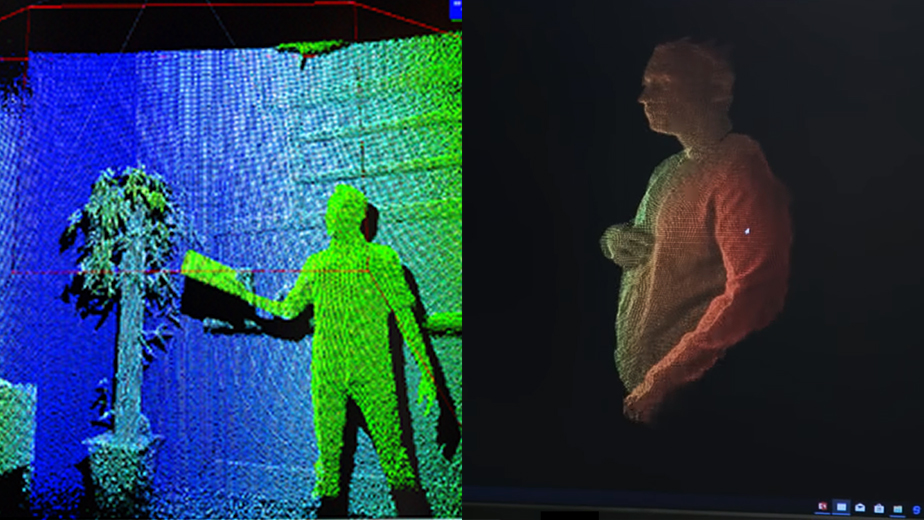

The company is now ready to share more, and its demo looks very promising. At the Microsoft Research Faculty Summit, Andrew Duan showed off some impressive real-time image quality. Using a test unit, Duan displayed a 3D point cloud generated by a connected PC. It captured individual folds in shirts and facial features, all while tracking movement stably.

Importantly, it also addresses a major limitation of the company's previous sensor: Project Kinect still provides good-quality depth data when an object is close to the camera, and it offers a noticeably wider field of view.

A Huge Step Forward

Project Kinect's improvements are powered by a significant leap in specs: a resolution of 1024×1024, automatic per-pixel gain selection for HDR, and power consumption of 225 to 950 mW. The result is far better quality than previous Kinect versions, which are so inaccurate that they're still used in ghost hunting.

Microsoft plans to offer the technology to organizations for computer vision in scenarios such as warehouse capacity monitoring. In one example, Microsoft showed a live feed displaying total warehouse capacity, shortage costs, and more, with the processing done on board the sensors. When it eventually releases, the next generation of HoloLens will also use the technology, enabling more accurate placement of virtual objects and better gesture detection.

It's not yet clear when Project Kinect will launch, but the demo shows a very functional experience. Duan says to stay tuned to the official site for further information.